LLVM is a compiler-writer's toolkit that purports to make it easy to implement new languages without getting bogged down in lots of machine-specific details like Instruction Set Architectures, or theoretic details like graph-coloring, register-spill algorithms and peephole optimizations.

I've had this dream of building a LISP dialect that compiles to machine code, and produces small and fast static binaries. For the first half of this year, I've mostly been reading (hence the low volume of updates despite what my New Year's Resolution promised). Having so immersed myself in the literature on language design and compiler construction, from the "purest" academic subjects of language formalisms to the in-the-trenches practice of assembler programming (yes, people still do that and it's awesome), I think I'm finally ready to start programming.

So, LLVM.

The thing most people talk about with LLVM is its Intermediate Representation. They talk about it so much that they just call it IR for short.

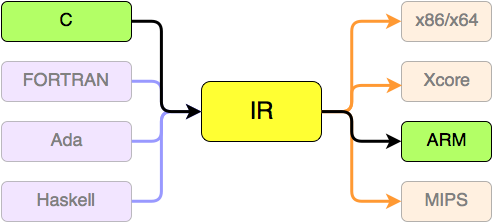

The IR provides a single common middle ground that all the front-end compiler modules generate. The back-end modules consume the IR and emit whatever the hell they want and/or need to. For example, the static compiler llc can read in IR and generate an executable machine code image for your specific processor.

Front-ends are on the left, back-ends on the right.

The IR frees up the front-end module authors from having to worry about the specifics of the target back-end. Likewise, back-end module authors, who may only be well-versed in the performance / optimization constraints of a specific processor family, don't need to worry about source-language concerns.

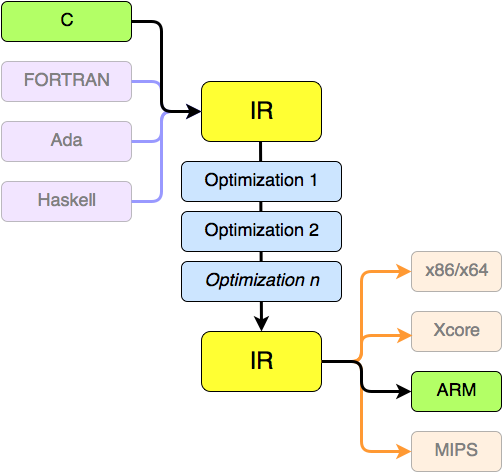

Using an intermediate representation also allows pipelining of modules that both consume and emit IR. Peephole optimization is a particularly lucid example. Consider the following assembler snippet:

movl %ebx, %eax ; put the contents of EBX into EAX

movl $0, %eax ; put the literal value 0 into EAX

No human would ever write this, but a generative compiler might, especially if these two statements saddle the gap between two generated templates. A peeophole optimizer's job is to look at the above and determine that the first instruction is useless. Why should the machine bother transfering the contents of the EBX register into the EAX register, only to immediately overwrite that value with 0?

Really then, the above diagram looks more like this:

This architecture makes it seemingly trivial to add new front-ends, back-ends and optimization passes, and the language design and compiler-writing community has risen to the challenge.

LLVM can translate C/C++, Haskell, Swift, Ada, D, Delphi, Fortran and Objective-C.

It can target object and machine code for x86, ARM, PowerPC, MIPS, SPARC, and the IBM zSeries of mainframes.

The optimization stages (called passes) are even more impressive. Here's just a few:

- Dead Code Elimination

- Constant Propagation

- Constant Folding

- Loop-invariant Migrations

- Loop Unrolling

- Tail Call Elimination

- Redundant Instruction Combination

- ... and more

Onward ------

Where to next?

For the motivated reader, here's some additional resources to satisfy your curiosity:

- Introduction to the LLVM Compiler System (slides) - For users

- The Architecture of Open Source Applications: LLVM - For hackers

- llvm.org is full of information both current and historical.

- Kaleidascope - a hands-on LLVM Turtorial

For my part, I'll be attempting to write follow-up essays in this series, covering the following topics:

- Writing, assembling and linking raw IR code

- Building a simple lexer/scanner for LISP-like languages

- Abstract Syntax Tree Annotation and Data Flow