Using iptables to Emulate Network Conditions

A few weeks ago, I ran into an interesting bug in some production daemon code that was being caused by weird network behavior.

This is what was happening on the wire (courtesy of tcpdump). Addresses and ports have been changed to protect the guilty, and some whitespace added to improve readability.

```
1 0.000000 10.0.1.8 -> 10.0.0.5 TCP 74 33892 > 1234 [SYN] Seq=0 Win=5840 Len=0
2 0.000004 10.0.0.5 -> 10.0.1.8 TCP 74 1234 > 33892 [SYN, ACK] Seq=0 Ack=1 Win=5792 Len=0
3 0.000466 10.0.1.8 -> 10.0.0.5 TCP 66 33892 > 1234 [ACK] Seq=1 Ack=1 Win=5840 Len=0

4 2.057643 10.0.1.8 -> 10.0.0.5 TCP 74 33894 > 1234 [SYN] Seq=0 Win=5840 Len=0
5 2.057656 10.0.0.5 -> 10.0.1.8 TCP 74 1234 > 33894 [SYN, ACK] Seq=0 Ack=1 Win=5792 Len=0
6 2.058656 10.0.1.8 -> 10.0.0.5 TCP 66 33894 > 1234 [ACK] Seq=1 Ack=1 Win=5840 Len=0

7 15.614608 10.0.1.8 -> 10.0.0.5 TCP 74 33899 > 1234 [SYN] Seq=0 Win=5840 Len=0
8 15.614615 10.0.0.5 -> 10.0.1.8 TCP 74 1234 > 33899 [SYN, ACK] Seq=0 Ack=1 Win=5792 Len=0
9 15.614991 10.0.1.8 -> 10.0.0.5 TCP 74 33899 > 1234 [ACK] Seq=1 Ack=1 Win=5840 Len=0
```

That pattern continues: a SYN packet from the client, the SYN+ACK response, the final ACK to nail up the connection, and then... nothing.
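Spotting that pattern by eye gets tedious in a long capture. The sketch below is a hypothetical helper, not part of the original investigation: it reads capture lines in the text format shown above and lists client endpoints whose connections never carried any payload (every segment reports Len=0). It assumes that exact field layout (addresses in fields 3 and 5, ports in fields 8 and 10, a Len=N field later on the line); find_silent_clients is a made-up name.

```shell
#!/bin/sh
# Hypothetical helper: list client IP:port pairs that talked to the daemon
# port but never exchanged a byte of payload in either direction.
# Assumes one packet per line in the text format shown above.
# Usage: find_silent_clients PORT < capture.txt
find_silent_clients() {
    awk -v port="$1" '
        $6 == "TCP" {
            # Fields 3/5 are the addresses, fields 8/10 the ports.
            if ($10 == port)     client = $3 ":" $8    # client -> server
            else if ($8 == port) client = $5 ":" $10   # server -> client
            else next
            seen[client] = 1
            # Sum the payload length reported in the Len=N field.
            for (i = 11; i <= NF; i++)
                if ($i ~ /^Len=/) bytes[client] += substr($i, 5)
        }
        END {
            for (c in seen)
                if (bytes[c] == 0) print c
        }' | sort
}
```

Run against a capture like the one above, it prints one line per silent client endpoint, for example 10.0.1.8:33892.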

The upshot for the server was that the finite number of connection slots in the daemon's memory structures filled up over time with essentially dead connections from hosts like this one. The fix was easy: add a timer to each slot and eject clients that tarry too long without sending us any real data.

But that's not the point of this post.

The interesting part of the whole ordeal was reproducing the network issue post-incident, to ensure that my proposed fix to the daemon connection-handling logic would withstand hours of such misbehavior.

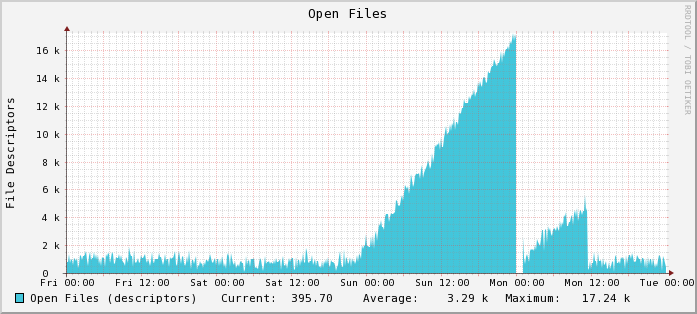

The problem is that we don't know exactly what happened. Graphs of per-process open file descriptor usage helped us pinpoint when the daemon started leaking fds. We think we know why it happened. We know that a hypervisor / virtual switch upgrade was taking place around the same time as the ramp-up period. We also know that we had seemingly unrelated outages in backbone connectivity to other data centers.

Literally all we had to go on was the tcpdump we took while things were broken. The challenge was to reproduce that network failure in a controlled fashion, inside our development infrastructure.

Naturally, I turned to iptables, which can do more than just keep out intruders. As a consummate inspector of packets, it can be used to emulate specific network problems with a surprising degree of accuracy.

For starters, here's the shell script I start all iptables experimentation with:

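```shell
#!/bin/sh

IPTABLES="/usr/bin/sudo /sbin/iptables"

# Start from a clean slate: flush every chain in the filter table.
$IPTABLES -F

# rules go here

# Show the resulting rules, with packet and byte counters.
$IPTABLES -L -nv

echo
echo "The firewall is up. Ctrl-C if you want to keep it up;"
echo "otherwise it will be automatically dropped in 4 minutes."
sleep 240

# Deadman switch: nobody hit Ctrl-C, so flush everything again.
echo "Dropping firewall (failsafe)"
$IPTABLES -F
echo "Dropped."
```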

Whenever I deal with firewalls, especially on virtual machines and remote physical servers where it can be difficult to get a non-networked console, I build in a deadman switch. After four minutes, without human intervention, the nascent firewall will be dropped. This ensures that if I do bork the connection all up (a distinct possibility), the box will "fix" itself after some time. Four minutes is enough time to switch to another terminal and verify that I can get back in over SSH.
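One limitation of flushing with -F is that it also throws away whatever rules were in place before the experiment. A possible alternative, sketched here and not part of the original workflow, is to snapshot the live rules with iptables-save and put them back with iptables-restore when the timer expires. The with_failsafe function and the IPTABLES_SAVE / IPTABLES_RESTORE variables are hypothetical names; every command can be overridden from the environment so the logic can be dry-run without root.

```shell
#!/bin/sh
# Hypothetical failsafe that restores a snapshot instead of flushing.
# Each command can be overridden from the environment for a dry run.
: "${IPTABLES:=/usr/bin/sudo /sbin/iptables}"
: "${IPTABLES_SAVE:=/usr/bin/sudo /sbin/iptables-save}"
: "${IPTABLES_RESTORE:=/usr/bin/sudo /sbin/iptables-restore}"

# Usage: with_failsafe SECONDS RULES_FUNCTION
# Saves the current rules, calls RULES_FUNCTION to add experimental rules,
# then restores the saved rules after SECONDS unless interrupted with Ctrl-C.
with_failsafe() {
    delay=$1
    rules=$2
    snapshot=$(mktemp) || return 1

    # Snapshot the live rule set before touching anything.
    if ! $IPTABLES_SAVE > "$snapshot"; then
        rm -f "$snapshot"
        return 1
    fi
    echo "Snapshot saved in $snapshot"

    "$rules"
    $IPTABLES -L -nv

    echo
    echo "Experimental rules are up. Ctrl-C to keep them;"
    echo "otherwise the saved rules come back in $delay seconds."
    sleep "$delay"

    # iptables-restore replaces the contents of each table in the snapshot.
    echo "Restoring saved rules (failsafe)"
    $IPTABLES_RESTORE < "$snapshot"
    rm -f "$snapshot"
    echo "Restored."
}

# Example (hypothetical rules function):
#   victim_rules() { $IPTABLES -A INPUT -p tcp --dport 1234 --src 10.44.0.6 -j DROP; }
#   with_failsafe 240 victim_rules
```

If there's a chance the SSH session itself gets torn down, running the script under nohup (or inside screen or tmux) keeps a hangup signal from killing the timer before it fires.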

In my case, my dev environment is set up with lots of other machines that are constantly connecting to my dev server, as if it were a prod service. As such, I won't be going into generating network load or anything like that.

We'll start off with (incorrect) rules that outright block a single host in our dev network, and verify that everything else is kosher:

```shell
VICTIM=10.44.0.6
PORT=1234

# Silently drop everything the victim sends to the daemon's port.
$IPTABLES -A INPUT -p tcp --dport $PORT --src $VICTIM -j DROP
```

That works (except that it doesn't). If we raise that firewall (and Ctrl-C to make it permanent), we should see that connections from our hapless victim at 10.44.0.6 are being silently dropped; no RST packet will be sent back.

Cool. But that's not the observed behavior. We were seeing the SYN / SYN+ACK / ACK three-way handshake complete; we just weren't seeing any data packets. Enter the --tcp-flags option.

Activated whenever you set the protocol match to TCP (via -p tcp), this handy option lets you accept or reject packets based on the TCP flags set in each. It takes two arguments. The first is the set of flags you want to examine, given as a comma-separated list of symbolic names (SYN, ACK, FIN, RST, URG, PSH, or the shorthands ALL and NONE). The second is the subset of those that must be set for the rule to match; any flag in the first list but not in the second must be clear.
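To make the mask and comparison semantics concrete, here is a toy model in plain shell; it does not call iptables. It encodes each flag as its bit in the TCP header (FIN=1, SYN=2, RST=4, PSH=8, ACK=16, URG=32) and reports a match when the packet's flags ANDed with the first list equal the second list, which is how the netfilter tcp match evaluates --tcp-flags. The function names flag_bits and tcp_flags_match are made up for illustration.

```shell
#!/bin/sh
# Toy model of iptables' "--tcp-flags MASK COMP" test (not iptables itself):
# a packet matches when (packet_flags AND MASK) == COMP.

# Convert a comma-separated flag list to its TCP header bit value.
# ALL covers the six classic flags, NONE is zero, as in iptables.
flag_bits() {
    bits=0
    for f in $(echo "$1" | tr ',' ' '); do
        case $f in
            FIN)  bits=$((bits | 1)) ;;
            SYN)  bits=$((bits | 2)) ;;
            RST)  bits=$((bits | 4)) ;;
            PSH)  bits=$((bits | 8)) ;;
            ACK)  bits=$((bits | 16)) ;;
            URG)  bits=$((bits | 32)) ;;
            ALL)  bits=$((bits | 63)) ;;
            NONE) ;;
            *)    echo "unknown flag: $f" >&2; return 2 ;;
        esac
    done
    echo "$bits"
}

# Usage: tcp_flags_match MASK COMP PACKET_FLAGS
# Exit status 0 means a rule with "--tcp-flags MASK COMP" would match.
tcp_flags_match() {
    mask=$(flag_bits "$1") || return 2
    comp=$(flag_bits "$2") || return 2
    pkt=$(flag_bits "$3") || return 2
    [ $((pkt & mask)) -eq "$comp" ]
}
```

For instance, tcp_flags_match SYN,RST SYN,RST SYN,RST,ACK succeeds because ACK lies outside the mask and is ignored, while tcp_flags_match ALL ACK PSH,ACK fails because PSH is examined and must be clear. By the same logic, the iptables documentation describes --syn as shorthand for --tcp-flags SYN,RST,ACK,FIN SYN.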

The canonical example (and about the only one you'll find by googling it) is BADFLAGS. Some flag combinations are nonsensical. Take SYN+FIN and SYN+RST, for example: "Set me up a connection that should be torn down."

Here's the full rule set:

```shell
# A chain that logs a packet and then drops it.
iptables -N BADFLAGS
iptables -A BADFLAGS -j LOG --log-prefix "BADFLAGS: "
iptables -A BADFLAGS -j DROP

# A chain that sends nonsensical flag combinations to BADFLAGS.
iptables -N TCP_FLAGS
# FIN, PSH or URG without ACK
iptables -A TCP_FLAGS -p tcp --tcp-flags ACK,FIN FIN -j BADFLAGS
iptables -A TCP_FLAGS -p tcp --tcp-flags ACK,PSH PSH -j BADFLAGS
iptables -A TCP_FLAGS -p tcp --tcp-flags ACK,URG URG -j BADFLAGS
# Contradictory pairs: FIN+RST, SYN+FIN, SYN+RST
iptables -A TCP_FLAGS -p tcp --tcp-flags FIN,RST FIN,RST -j BADFLAGS
iptables -A TCP_FLAGS -p tcp --tcp-flags SYN,FIN SYN,FIN -j BADFLAGS
iptables -A TCP_FLAGS -p tcp --tcp-flags SYN,RST SYN,RST -j BADFLAGS
# Every flag, no flag, and other combinations port scanners like to send
iptables -A TCP_FLAGS -p tcp --tcp-flags ALL ALL -j BADFLAGS
iptables -A TCP_FLAGS -p tcp --tcp-flags ALL NONE -j BADFLAGS
iptables -A TCP_FLAGS -p tcp --tcp-flags ALL FIN,PSH,URG -j BADFLAGS
iptables -A TCP_FLAGS -p tcp --tcp-flags ALL SYN,FIN,PSH,URG -j BADFLAGS
iptables -A TCP_FLAGS -p tcp --tcp-flags ALL SYN,RST,ACK,FIN,URG -j BADFLAGS

# A user-defined chain does nothing until a built-in chain jumps to it.
iptables -A INPUT -p tcp -j TCP_FLAGS
```

That's not how we want to use this option, though. What we need to do is allow SYN, SYN+ACK and ACK, but disallow most everything else (including the FIN packet and any data packets).

More formally, we should:

- Allow any packet with only the ACK flag set
- Allow any packet with only the SYN flag set
- Drop everything else

This translates quite nicely into our iptables calls:

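```shell
VICTIM=10.44.0.6
PORT=1234

# Let the handshake through: a bare SYN, then a bare ACK.
$IPTABLES -A INPUT -p tcp --dport $PORT --src $VICTIM --tcp-flags ALL SYN -j ACCEPT
$IPTABLES -A INPUT -p tcp --dport $PORT --src $VICTIM --tcp-flags ALL ACK -j ACCEPT
# Drop everything else from the victim, including data and FIN segments.
$IPTABLES -A INPUT -p tcp --dport $PORT --src $VICTIM -j DROP
```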
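It can help to walk a connection's segments through these rules by hand. Below is a toy simulation in shell (not iptables itself; normalize_flags and verdict are hypothetical helpers) of what the three rules decide for segments arriving from the victim. Because every rule uses ALL as its mask, a segment matches only if it carries exactly the listed flags. Only the six flags that ALL covers are modeled. The server's SYN+ACK is outbound, so rules matching on the victim as source never see it.

```shell
#!/bin/sh
# Toy simulation (not iptables) of the three rules above, for segments
# sent by the victim to the daemon port. With ALL as the mask, a rule
# matches only when the segment carries exactly the listed flags.

# Sort a comma-separated flag list so "PSH,ACK" and "ACK,PSH" compare equal.
normalize_flags() {
    echo "$1" | tr ',' '\n' | sort | paste -s -d , -
}

# Print the verdict the rules would reach for a segment with these flags.
verdict() {
    case $(normalize_flags "$1") in
        SYN) echo ACCEPT ;;   # --tcp-flags ALL SYN: the client's SYN
        ACK) echo ACCEPT ;;   # --tcp-flags ALL ACK: handshake ACK, bare ACKs
        *)   echo DROP ;;     # final rule: PSH,ACK data, FIN,ACK, and so on
    esac
}

for flags in SYN ACK PSH,ACK FIN,ACK; do
    printf '%-8s %s\n' "$flags" "$(verdict "$flags")"
done
```

One thing the simulation makes visible: a data segment that happens to carry only ACK (no PSH) would also be accepted, since flag matching alone can't tell a bare ACK from one carrying payload. Stacks typically set PSH on the last segment of each write, so small requests are still dropped, but it's worth keeping in mind if the reproduction ever lets data through.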

Perfect!

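If we run a tcpdump with this firewall up, we should see the three-way handshake complete, just as in our production packet capture, while the daemon never receives a byte of data. One caveat if you capture on the server itself: on Linux, tcpdump sees inbound packets before netfilter gets a chance to drop them, so the victim's data segments (and their retransmissions) will still show up in the capture, just never acknowledged.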

iptables is a flexible filter that operates on packets. When combined with the insight gained from tcpdump, you can turn it into a powerful development tool.